AI is now writing code at scale - but who’s checking it?

As Generative AI (GenAI) reshapes the software development landscape, the risks and complexities around managing what gets built, where it comes from, and how it’s secured are growing just as fast. The Cloudsmith 2025 Artifact Management Report examines this shift, offering insights into how teams are adapting their infrastructure and software supply chain security practices in response to AI-generated code.

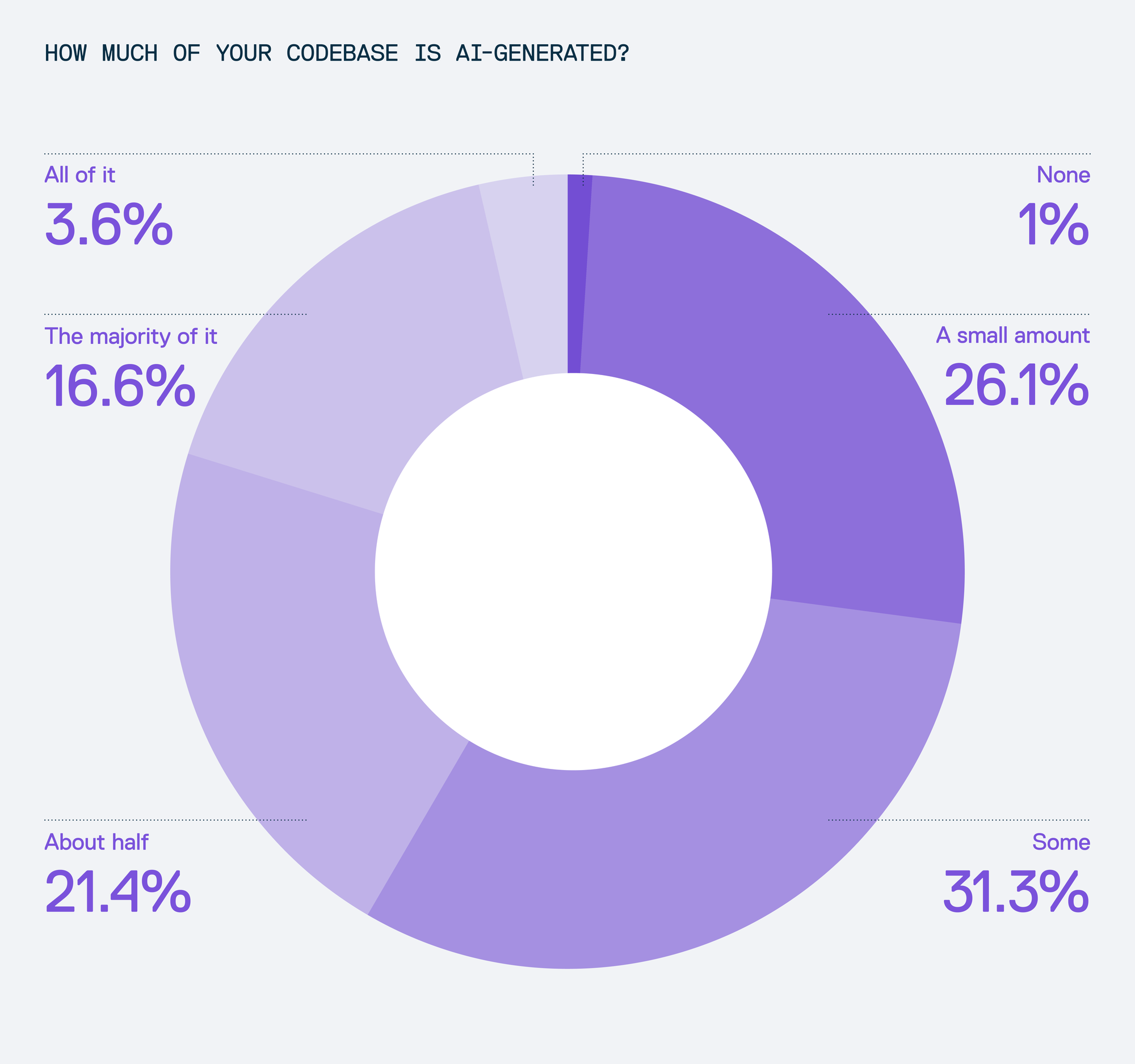

For many developers, at least half of the codebase is now AI-generated

According to our survey, 42% of developers using AI report that at least half of their codebase is now generated by AI tools. Where in 2024 this might have been considered a theoretical shift, in 2025 we need to accept that this is now a practical reality reshaping how software is written. AI isn't just a helper on the sidelines; it’s actively contributing to the core of modern applications and transforming the day-to-day workflow of developers. While the rapid rise of GenAI is often seen as a leap forward in productivity and innovation, it also introduces new security risks and software supply chain vulnerabilities that can’t be ignored.

Attackers are targeting the software supply chain

Adversaries are increasingly shifting their focus from traditional infrastructure attacks to targeting the software supply chain itself. Rather than breaching networks directly, they’re infiltrating the ecosystem through techniques like typosquatting (publishing malicious packages with names deliberately designed to mimic legitimate ones) and slopsquatting (registering package names that AI tools hallucinate, so that a suggested but non-existent dependency resolves to a malicious package).

These deceptive packages are easy to overlook, especially in fast-paced development environments. Developers, often under tight deadlines and relying heavily on AI-assisted tooling to manage dependencies, may unknowingly pull in these malicious components without thorough vetting. The result is a stealthy but highly effective method for attackers to introduce vulnerabilities directly into production code.
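One way to catch look-alike names before they are installed is to compare each requested dependency against a list of packages the team has already approved. The sketch below is illustrative only: the `APPROVED` set, the `classify_dependency` function, and the 0.8 similarity threshold are all assumptions made for this example, not part of any Cloudsmith product or package manager. A name that nearly matches an approved package is flagged as suspicious, and a name that matches nothing (which is where a package name invented by an AI assistant would land) is reported as unknown so a human can vet it.

```python
from difflib import SequenceMatcher

# Hypothetical allowlist; in practice this would come from your own
# registry of vetted packages.
APPROVED = {"requests", "numpy", "pandas", "cryptography"}


def classify_dependency(
    name: str,
    approved: set[str] = APPROVED,
    threshold: float = 0.8,  # assumed cut-off; tune for your ecosystem
) -> str:
    """Return 'approved', 'suspicious' (near-miss of an approved name), or 'unknown'."""
    candidate = name.strip().lower()
    if candidate in approved:
        return "approved"
    for known in approved:
        # ratio() is 1.0 for identical strings and falls towards 0.0
        # as the two names diverge.
        if SequenceMatcher(None, candidate, known).ratio() >= threshold:
            return "suspicious"
    return "unknown"


if __name__ == "__main__":
    for dep in ["requests", "reqeusts", "left-pad"]:
        print(dep, "->", classify_dependency(dep))
```

A string-similarity check like this is only a first filter. It will miss look-alikes of packages that are not on the allowlist, so it complements rather than replaces registry-level vetting.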

AI and automation: a double-edged innovation

Survey respondents anticipate that GenAI-driven automation will bring both benefits and complications: it should accelerate development workflows while also straining infrastructure with unpredictable behaviors and dependency complexities. Tools like GitHub Copilot and Anysphere Cursor promise greater speed and efficiency in coding, but they also raise new red flags for security teams. As automation becomes more deeply embedded in the development process, the potential for AI-generated code to introduce unknown risks or unstable dependencies grows, challenging long-held assumptions about trust and reliability in the software pipeline.

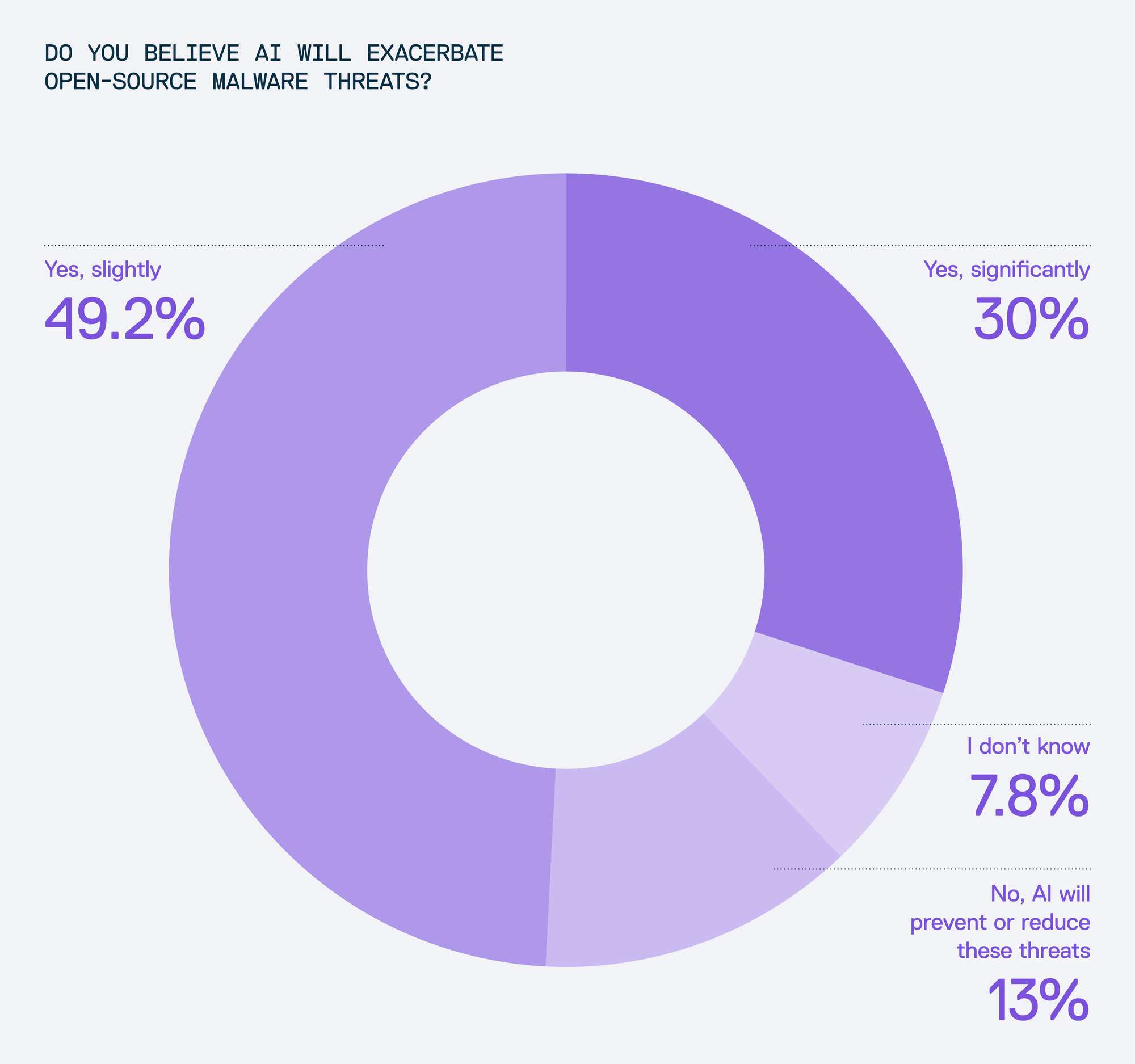

These concerns are further amplified by the widespread adoption of Large Language Model (LLM)-based technologies. While LLMs can significantly boost developer productivity, they also pose new threats (such as recommending non-existent or harmful packages). When asked whether AI would worsen open-source dependency chain abuse risks like typosquatting or dependency confusion, nearly 80% of respondents agreed it would, with almost a third warning of a significant rise in exposure.

These evolving development patterns are expanding an already vulnerable software supply chain. Without rigorous artifact validation and integrity checks, developers face growing risks - not just from external attackers, but also from the very tools designed to help software development teams move faster.
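At its simplest, an integrity check compares an artifact's cryptographic digest with a value that was pinned when the artifact was first vetted. The following is a minimal sketch using only the Python standard library; `sha256_digest` and `verify_artifact` are hypothetical helper names chosen for this example, and how the pinned digest is stored (lockfile, registry metadata, SBOM) is left open.

```python
import hashlib
import hmac


def sha256_digest(data: bytes) -> str:
    """Return the hex-encoded SHA-256 digest of an artifact's bytes."""
    return hashlib.sha256(data).hexdigest()


def verify_artifact(data: bytes, expected_sha256: str) -> bool:
    """True only if the artifact's digest matches the pinned value."""
    actual = sha256_digest(data)
    # compare_digest performs a constant-time comparison.
    return hmac.compare_digest(actual, expected_sha256.strip().lower())


if __name__ == "__main__":
    artifact = b"example artifact contents"
    pinned = sha256_digest(artifact)  # recorded at review time
    print(verify_artifact(artifact, pinned))              # unchanged artifact
    print(verify_artifact(artifact + b"tamper", pinned))  # modified artifact
```

A matching digest shows that the bytes have not changed since they were pinned. It says nothing about whether the original artifact was trustworthy, which is why it is paired with provenance and review.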

To address these emerging threats, modern artifact management solutions must embed intelligent access controls and offer end-to-end visibility into artifact provenance. With dynamic access control policies, organizations can ensure only authorized users and processes can interact with sensitive assets, reducing the likelihood of unauthorized changes or malware insertion. Coupled with robust policy-as-code frameworks, enterprises can establish and enforce security protocols that adapt as threats evolve, especially in environments where AI-generated code is becoming commonplace.

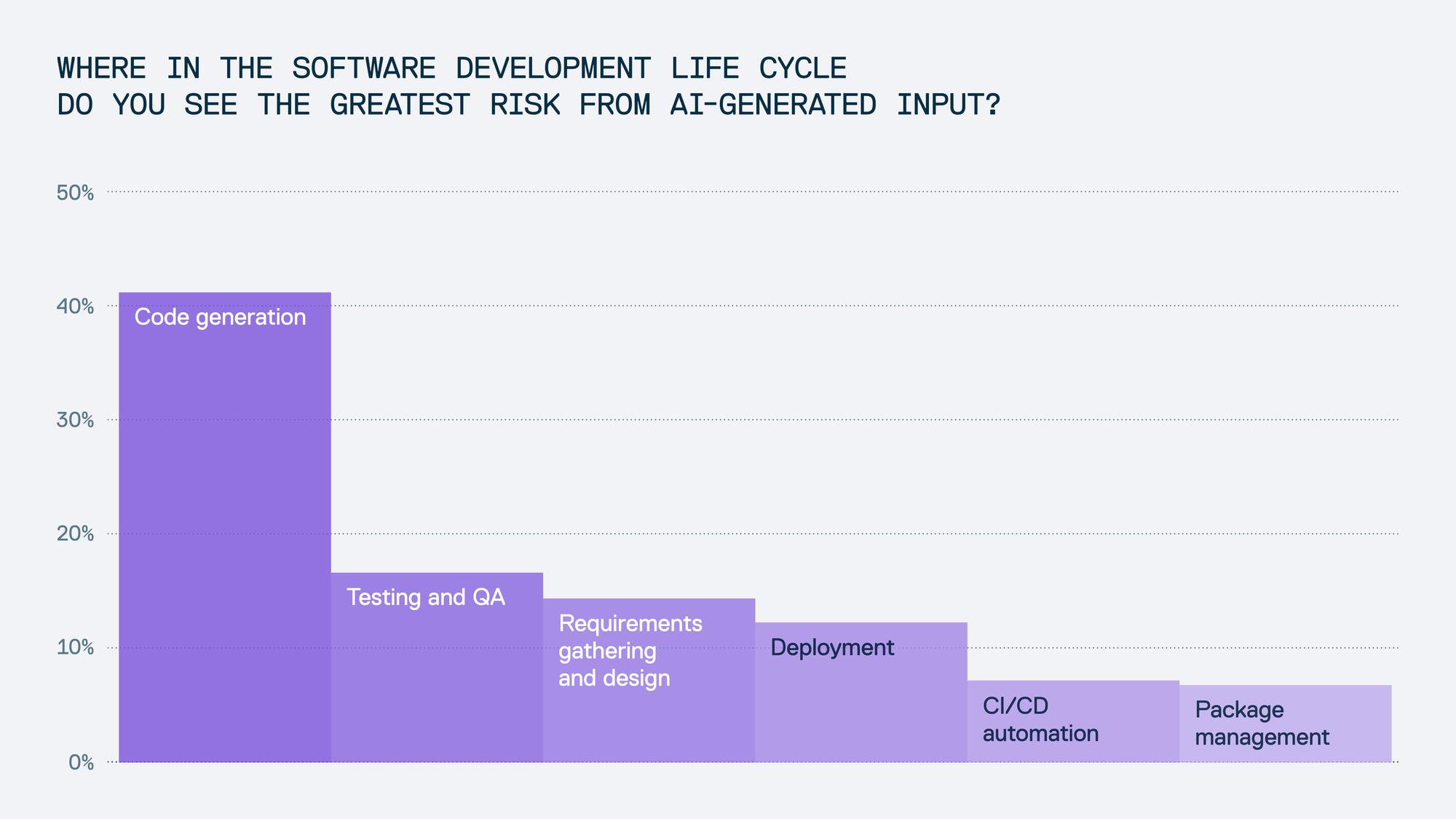

Where in the SDLC are AI-generated risks coming from?

While the speed and efficiency benefits of using AI in software development are clear, the oversight is not.

Only 67% of developers surveyed review AI-generated code before every deployment, leaving large portions of production code potentially unvetted. This gap is quickly becoming a vulnerability in the software supply chain. The behavior, driven by a desire to move faster, is dramatically expanding the attack surface. AI isn’t just introducing new code; it’s also introducing new risks, often at scale. Traditional concerns like artifact integrity, dependency management, and SBOMs (Software Bills of Materials) are being compounded by AI’s ability to rapidly consume and reuse unknown or untrusted code.

41% of respondents identified code generation as the riskiest point of AI influence, yet practices around review and trust remain inconsistent:

- 66% said they only trust AI-generated code after manual review.

- Just 20% fully trust AI output without extra scrutiny.

- 59% apply additional reviews to AI-generated packages, while 16% treat them like any other package, with no further checks.

The need for secure artifact management in the AI era

This uneven approach is happening against a backdrop of expanding AI usage: alarmingly, 86% of organizations have seen more AI-influenced dependencies in the last year, with 40% seeing a significant increase. Yet, only 29% of teams feel very confident in their ability to detect malicious code in open-source libraries, which is the very ecosystem where AI tooling tends to source its suggestions. The threats, according to those surveyed, range from sensitive data leakage to the inclusion of compromised dependencies. These risks are amplified when developers blindly trust AI-generated code or package suggestions without security oversight.

These findings point to a critical inflection point. AI is becoming a core contributor to the software stack, but we haven’t fully adapted our trust models, tooling, or policies to match this new reality. Relying on developers to manually spot every risk (especially under time pressure) is not sustainable.

What’s needed is a secure checkpoint for AI-assisted development:

- Automatically enforced policies to catch unreviewed or untrusted AI-generated artifacts.

- Artifact provenance tracking to distinguish between human-authored and AI-authored code.

- Integration of trust signals directly into the development pipeline, so that reviews become automatic rather than optional.
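The three points above can be sketched as a small policy gate that runs in the pipeline before an artifact is promoted. This is an illustration under stated assumptions rather than a description of any specific tool: the `Artifact` fields, the two rules in `policy_violations`, and the `gate` function are hypothetical, and a real setup would read these signals from build metadata or a policy-as-code engine.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Artifact:
    name: str
    ai_generated: bool         # provenance signal: was the code AI-authored?
    reviewed: bool             # trust signal: has a human signed off?
    provenance_recorded: bool  # is the artifact's origin known at all?


def policy_violations(artifact: Artifact) -> list[str]:
    """Return the (assumed) policy rules this artifact breaks."""
    violations = []
    if not artifact.provenance_recorded:
        violations.append("provenance not recorded")
    if artifact.ai_generated and not artifact.reviewed:
        violations.append("AI-generated artifact has not been reviewed")
    return violations


def gate(artifacts: list[Artifact]) -> dict[str, list[str]]:
    """Map each blocked artifact name to its violations; empty means all passed."""
    return {a.name: v for a in artifacts if (v := policy_violations(a))}


if __name__ == "__main__":
    candidates = [
        Artifact("billing-lib", ai_generated=True, reviewed=True, provenance_recorded=True),
        Artifact("helper-utils", ai_generated=True, reviewed=False, provenance_recorded=True),
    ]
    for name, reasons in gate(candidates).items():
        print(f"blocked {name}: {', '.join(reasons)}")
```

Because the gate runs on every promotion, an unreviewed AI-generated artifact is stopped by default instead of relying on a developer to remember the review step.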

Download the 2025 Cloudsmith Artifact Management report

Explore the latest trends, insights, and best practices for securing your software supply chain and managing AI-generated code responsibly.

Download - 2025 Cloudsmith Artifact Management Report

Webinar - From AI to Scalability: 2025 Trends in Artifact Management
